A Brief History of IQ Tests

A chance conversation between two strangers on a train.

And the rest, as they say, is history!


The concept of measuring intelligence has fascinated and challenged scientists, psychologists, and educators for over a century. The development of the intelligence quotient (IQ) test has a rich history, marked by both achievements and controversies.


Origins and Early Development

The journey of the modern IQ test began in the early 20th century with the French psychologist Alfred Binet. Commissioned by the French government in 1904 to identify students who needed educational assistance, Binet, along with his colleague Théodore Simon, developed the Binet-Simon scale, first published in 1905. It was the first practical test designed to measure intellectual abilities, and it focused on verbal abilities, memory, attention, and problem-solving skills.

Standardization and the Stanford-Binet

In 1916, Lewis Terman at Stanford University revised the Binet-Simon scale; the revision became known as the Stanford-Binet Intelligence Scale. Terman adopted the intelligence quotient, a score obtained by dividing a person’s mental age by their chronological age and multiplying the result by 100. Expressing scores as a ratio put them on a common scale and allowed comparison across age groups.


World War I and the Army Alpha and Beta Tests

The entry of the United States into World War I created a need to assess recruits’ mental abilities rapidly. In response, a team of psychologists led by Robert Yerkes developed the Army Alpha and Beta tests in 1917. These were the first mass-administered intelligence tests, designed to screen recruits in large groups. The Alpha test was text-based, while the Beta was pictorial, designed for recruits who were illiterate or did not speak English.

Expansion and Controversy

Throughout the 20th century, IQ testing expanded beyond educational and military settings into employment and immigration policy. The expansion drew criticism. Critics argued that the tests carried cultural and socio-economic biases, favored certain groups over others, and were not a true measure of an individual's potential or intelligence.

Modern Developments

In the latter half of the 20th century, new theories challenged the traditional concept of a single, measurable intelligence. Howard Gardner’s theory of multiple intelligences, proposed in 1983, argued that intelligence is not a single general ability but a combination of several distinct abilities. Despite these theories, IQ tests remain widely used today, though often in conjunction with other assessments to provide a more comprehensive view of an individual’s abilities.

Defining IQ


Intelligence Quotient (IQ) is a numerical expression derived from a standardized assessment designed to measure human intelligence relative to others of the same age. The concept of IQ emerged from the early work of French psychologist Alfred Binet, who, in the early 1900s, was tasked by the French government with identifying students who required additional academic support. Binet's approach involved creating a test that could gauge a child's mental abilities compared to their peers. He introduced the idea of a "mental age," which expressed a child's level of intellectual functioning as the age at which an average child reaches that level.

Alfred Binet (8 July 1857 - 18 October 1911)

William Stern (29 April 1871 - 27 March 1938)

The term "IQ" was coined by German psychologist William Stern, who proposed expressing intelligence as the ratio of mental age to chronological age, multiplied by 100 to eliminate decimals. For example, if a 10-year-old child had the mental capabilities typical of an 8-year-old, their IQ would be calculated as (8/10) × 100, resulting in an IQ of 80. This formula provided a straightforward way to quantify cognitive abilities relative to age.
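The ratio formula is simple enough to express in a few lines of code. The sketch below is only an illustration of the arithmetic described above; the function name `ratio_iq` and its parameter names are made up for this example and do not come from any testing manual.

```python
def ratio_iq(mental_age: float, chronological_age: float) -> float:
    """Historical ratio IQ: (mental age / chronological age) * 100.

    Illustrative helper only; the name and signature are assumptions.
    """
    if chronological_age <= 0:
        raise ValueError("chronological_age must be positive")
    # Multiply before dividing so whole-number ages give exact results.
    return 100 * mental_age / chronological_age


# The example from the text: a 10-year-old with a mental age of 8.
print(ratio_iq(8, 10))   # 80.0
# A 10-year-old performing like a typical 12-year-old scores above 100.
print(ratio_iq(12, 10))  # 120.0
```

A child whose mental age equals their chronological age always scores exactly 100 under this formula, which is why 100 came to represent average performance.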

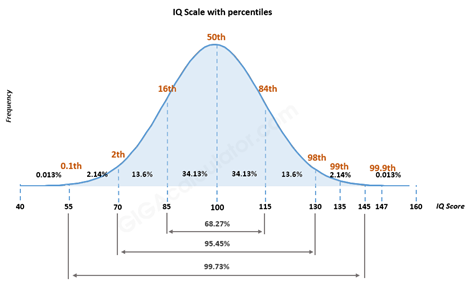

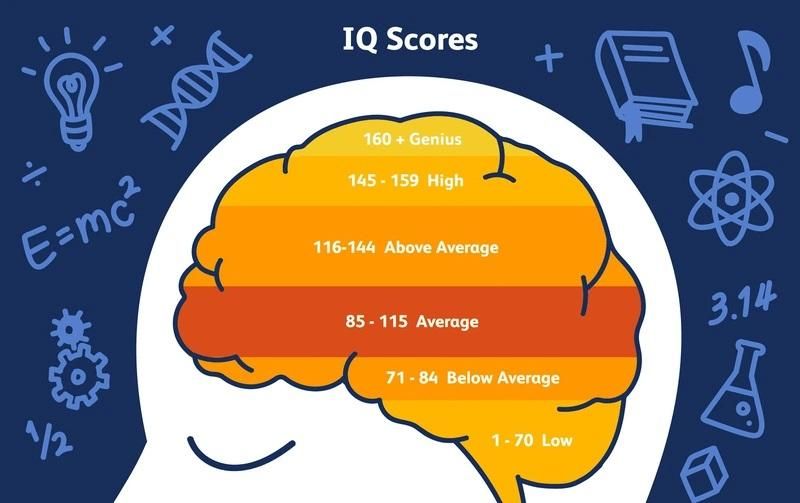

As intelligence testing evolved, the original formula was replaced with modern techniques that account for the statistical distribution of intelligence in the general population. Today, IQ scores are typically normalized so that the average score for any given age group is set at 100, with a standard deviation of 15. Because the scores are scaled to follow a normal distribution, approximately 68% of people score within one standard deviation of the mean (between 85 and 115), and about 95% score within two standard deviations (between 70 and 130).
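The 68% and 95% figures can be checked numerically. The following sketch assumes that scores follow an exactly normal distribution with mean 100 and standard deviation 15; real tests derive scores from norming samples, so this is an idealization. The helper names (`deviation_iq`, `normal_cdf`, `share_between`) are hypothetical and chosen only for this example.

```python
import math

MEAN = 100.0  # average score assigned to each age group
SD = 15.0     # standard deviation of the score scale


def deviation_iq(z: float) -> float:
    """Convert a standard score (z) into a score on the IQ scale."""
    return MEAN + SD * z


def normal_cdf(score: float) -> float:
    """Share of an ideal normal population scoring at or below `score`."""
    return 0.5 * (1.0 + math.erf((score - MEAN) / (SD * math.sqrt(2.0))))


def share_between(low: float, high: float) -> float:
    """Share of the population scoring between `low` and `high`."""
    return normal_cdf(high) - normal_cdf(low)


print(deviation_iq(1.0))                       # 115.0
print(round(share_between(85, 115) * 100, 1))  # 68.3
print(round(share_between(70, 130) * 100, 1))  # 95.4
```

Under this model a score says where a person stands relative to peers of the same age, rather than comparing mental age with chronological age as the ratio formula did.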


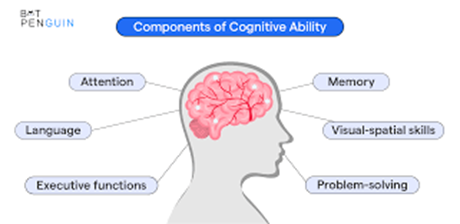

Modern IQ tests are designed to assess a range of cognitive abilities, including verbal reasoning, mathematical problem-solving, spatial awareness, memory, and processing speed. These tests provide a composite score that reflects an individual's overall cognitive functioning, as well as scores for specific cognitive domains.


However, defining IQ solely through test scores can be misleading. The tests themselves, while rigorously developed, are not without limitations. They are based on a specific understanding of intelligence, primarily centered on analytical and logical reasoning abilities. Consequently, these tests may not fully capture other dimensions of intelligence, such as creativity, practical problem-solving, emotional intelligence, and social understanding. Moreover, cultural and linguistic biases can affect test performance, potentially skewing the results for individuals from diverse backgrounds.

Thus, while IQ offers a quantifiable measure of certain cognitive abilities, it represents only one aspect of human intelligence. The complexity of the human mind, with its vast array of capabilities and talents, cannot be fully encapsulated in a single score.